What makes preprints popular?

Posted by Gautam Dey, on 31 January 2019

A team of preLights selectors respond to a meta-analysis of bioRxiv preprints.

Gautam Dey, Zhang-He Goh, Lars Hubatsch, Maiko Kitaoka, Robert Mahen, Máté Palfy, Connor Rosen and Samantha Seah*

*all authors contributed equally; cross-posted from here.

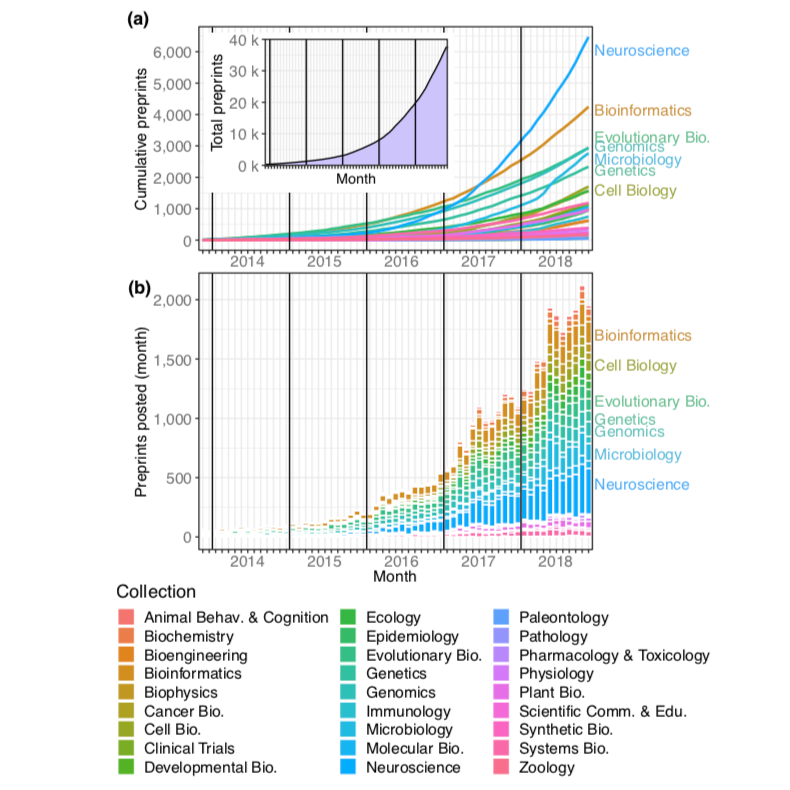

The growing adoption of preprints in the biological sciences over the last five years has driven discussion within the academic community about the merits, goals, and potential downsides of disseminating work before peer review. However, the community has lacked a systematic bibliometric analysis of preprints (Figure 1) in which to root these discussions. Abdill and Blekhman¹ have now analysed data on bioRxiv preprints to identify trends in preprint usage, popularity, and outcomes. They have also created the website rxivist.org to facilitate future analysis and to provide an alternative platform for discovering actively discussed preprints.

As members of the preLights community who support the increased uptake of – and discussion about – preprints in the life sciences, we have taken this opportunity to reflect on how preprint success can be measured, and what the data provided by Abdill and Blekhman tell us about preprints in the life sciences.

What makes a preprint successful?

Citation rates have long served as the bibliometric gold standard for measuring the scientific impact of publications. However, follow-up studies and reviews can take years to appear in the literature, so the citation-based impact of any one study can only be assessed in the long term (read: years to decades).

Standing in sharp contrast to this slow, cumulative view of scientific impact, the core goal of preprints is to accelerate the dissemination of scientific observations – to promote discussion, collaboration, and quick follow-up. In this sense, the ability of a preprint to reach the scientific community quickly and effectively is perhaps the ultimate measure of its success.

This alternative perspective justifies Abdill and Blekhman’s use of preprint downloads, Twitter activity, and eventual publication outcomes as key quantitative metrics of preprint success, but these metrics naturally raise questions of their own. Is it acceptable to use Twitter to define “popularity”? Our experience suggests that Twitter is indeed the dominant social media platform for spreading preprints, but relying on it risks excluding communities of scientists, and their opinions, simply because they lack a Twitter presence. Our hope is that preprint curation platforms (such as preLights) will play an ever more important role in dissemination.

As with all bibliometric methods, it is important to keep in mind the potential for manipulation or misuse. As summarised in Goodhart’s Law, when a measure becomes a target, it ceases to be a good measure². While we applaud the introduction of quantitative measures of preprint “success” in the rxivist project, and the detailed analyses the authors have conducted, we are mindful that a small number of metrics should not be used to judge a preprint without critical engagement and evaluation.

Social media and the dissemination of science

The use of Twitter activity as a measure of preprint popularity on the rxivist website raises several issues. First, biases in research communication via social media are poorly understood. For example, scientists active on Twitter vary widely in how many followers they have³. As a result, the attention of a single influential scientist could create disparities in popularity far larger than any underlying differences in quality.

Second, preprinting opens up a space, until recently unexplored, for community engagement, spanning the gap between the dissemination of the authors’ unfiltered data and ideas and the final peer-approved version. The bulk of this engagement takes place on social media platforms like Twitter. While the informal, loose, and relatively egalitarian structure of communicating this way can be immensely liberating, it is also easy to get lost in the noise or simply to be overwhelmed by the volume of information. It is possible to go to sleep in the UK and miss a wide-ranging and critical discussion about a preprint taking place in US time zones; by the time you wake up, Twitter has moved on to the next big thing. In a world where science is disseminated via social media, preprint curation and journal club initiatives (and the bioRxiv comments section) must take on a critical role: providing a stable platform for sustained, publicly recorded engagement while remaining responsive to the abbreviated timescales of social media.

What happens, then, after the first few weeks, when the tweetstorms have settled, and the commentaries have been posted? We explore this in more detail below.

Time to publication

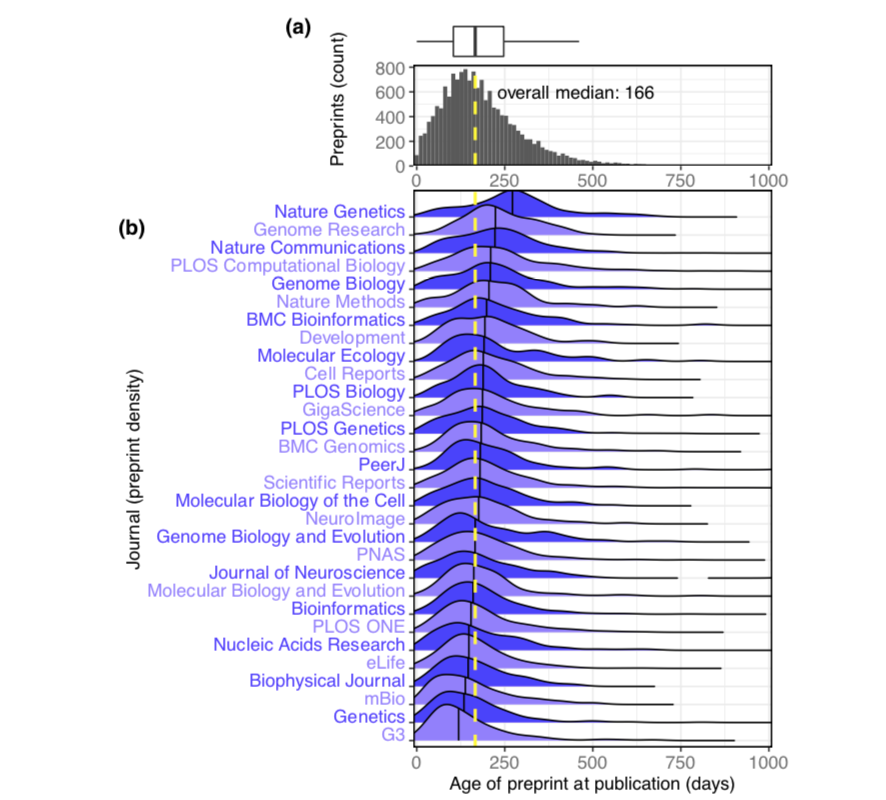

The eventual publication outcomes of preprints, that is, their appearance in a peer-reviewed journal, feature heavily in the metrics used by Abdill and Blekhman. The authors found that two-thirds of bioRxiv preprints posted between 2013 and the end of 2016 were eventually published in peer-reviewed form. This high rate suggests that authors tend to post good-quality preprints, and that early fears that work not meeting certain scientific standards might ‘clog’ bioRxiv were unfounded. The median time from the posting of a preprint on bioRxiv to its publication in a peer-reviewed journal was just under six months, although this varied substantially depending on the journal of publication (Figure 2). In terms of accelerating science, bringing forward the public dissemination of new information by around six months could be considered a significant success, especially for early-career researchers, for whom six months represents a large fraction of their career to date. Having their work and ideas publicly available enables them to receive feedback earlier and to prepare effectively for career transitions.

However, as Abdill and Blekhman point out, “time-to-publication” is influenced by a plethora of factors, including journal behaviour, the stage of the publication process at which preprints are posted, and whether preprints are ever published at all.

It’s worth noting that the two “slowest” and the two “fastest” journals (in terms of time from bioRxiv posting to final publication) all fall within the field of genetics and genomics: Genetics and G3 at the “fast” end, and Nature Genetics and Genome Research at the “slow” end. This suggests that field-specific attitudes and norms about preprint usage are not what drives the differences in publication times between journals.

Preprints can be posted at many stages of the publication process. More field-specific data on when authors typically post preprints would help us understand how authors are using preprints: are they sharing work as close to publication as possible, or seeking feedback on their work before submitting it to a journal? Although the total number of infractions appears to be small, it’s worth noting that some authors appear to be flouting the bioRxiv guidelines, which state that preprints must be uploaded before acceptance by a journal.

Publication as a readout of preprint quality

The authors find a significant correlation between preprint download counts and the impact factor of the eventual journal of publication. This suggests that the popularity of a preprint reflects the eventual perceived impact and quality of the work, and that there is some informal consensus within the scientific community about the scientific quality of both published and unpublished preprints. However, the authors rightly point out that publication, particularly in a high-profile journal, may itself drive further downloads. Additionally, both metrics are susceptible to biases that distort the connection between popularity and quality, such as name recognition of the principal investigator or “hot” topics within a field. Further validation will therefore be needed to determine how closely this measure tracks the scientific utility of a preprint. For instance, it will be interesting to examine whether the number of downloads a preprint receives in its first month has predictive value for eventual publication.

At the other end of the scale, what about preprints that are never published in peer-reviewed form? Missing links to final publications in the rxivist website and analysis may be partly a technical issue, since changes in authors or preprint title make it difficult for automated methods to match preprints to their final publications, but this is unlikely to account for many preprints. Of greater concern is whether these unpublished preprints are of lower quality, built on poor science that would not survive the stringency of peer review. We argue that a lack of final publication should not be held as an indictment of the quality of a preprint. First, preprints help to communicate results more rapidly, particularly where matching a manuscript to typical journal expectations may be difficult or impossible, for example after the primary author has left the lab. Second, preprints can serve as useful repositories of negative results, which often remain unpublished; after all, “a negative result is still a result”. Preprints communicating preliminary or shorter stories can therefore prompt discussion and study in the field and be as successful as those that lead to full publication, especially if they invalidate previous hypotheses or redirect research in new directions.

Conclusion

The explosive rise of bioRxiv preprints in the life sciences since 2013 has clearly demonstrated the value of increased speed and visibility in the publishing process. Leveraging social media has also been key to this rise. The rxivist project is a laudable effort to collect and collate data on preprint usage, which can in turn be used to measure the influence and uptake of preprints in the life sciences, while the rxivist website makes these data accessible and allows others to interact easily with the metadata. We believe the data indicate that preprints are generally successful and that their increasing adoption is a positive trend not just for the life sciences, but for science as a whole. While the metrics for preprints diverge from those used for standard peer-reviewed publications, this appropriately reflects the differences between the standards and goals of preprints and those of peer-reviewed journal articles. We thank the authors of the rxivist project for their work, and are excited to watch our understanding of preprint publishing continue to grow.

References

- Abdill, R. J. & Blekhman, R. Tracking the popularity and outcomes of all bioRxiv preprints. bioRxiv 515643 (2019). doi:10.1101/515643

- Biagioli, M. Watch out for cheats in citation game. Nature 535, 201 (2016).

- Côté, I. M. & Darling, E. S. Scientists on Twitter: Preaching to the choir or singing from the rooftops? FACETS 3, 682–694 (2018).